Author: Robertson, David

Conceptual Article | Open Source

Revised: 2025 February

Published Online: 2024 June – All Rights Reserved

APA Citation: Robertson, D. M. (2024, June 26). Epistemic Rigidity: A Theoretical Framework for Understanding Cognitive Barriers to Knowledge Advancement. DMRPublications. https://www.dmrpublications.com/epistemic-rigidity/

Abstract:

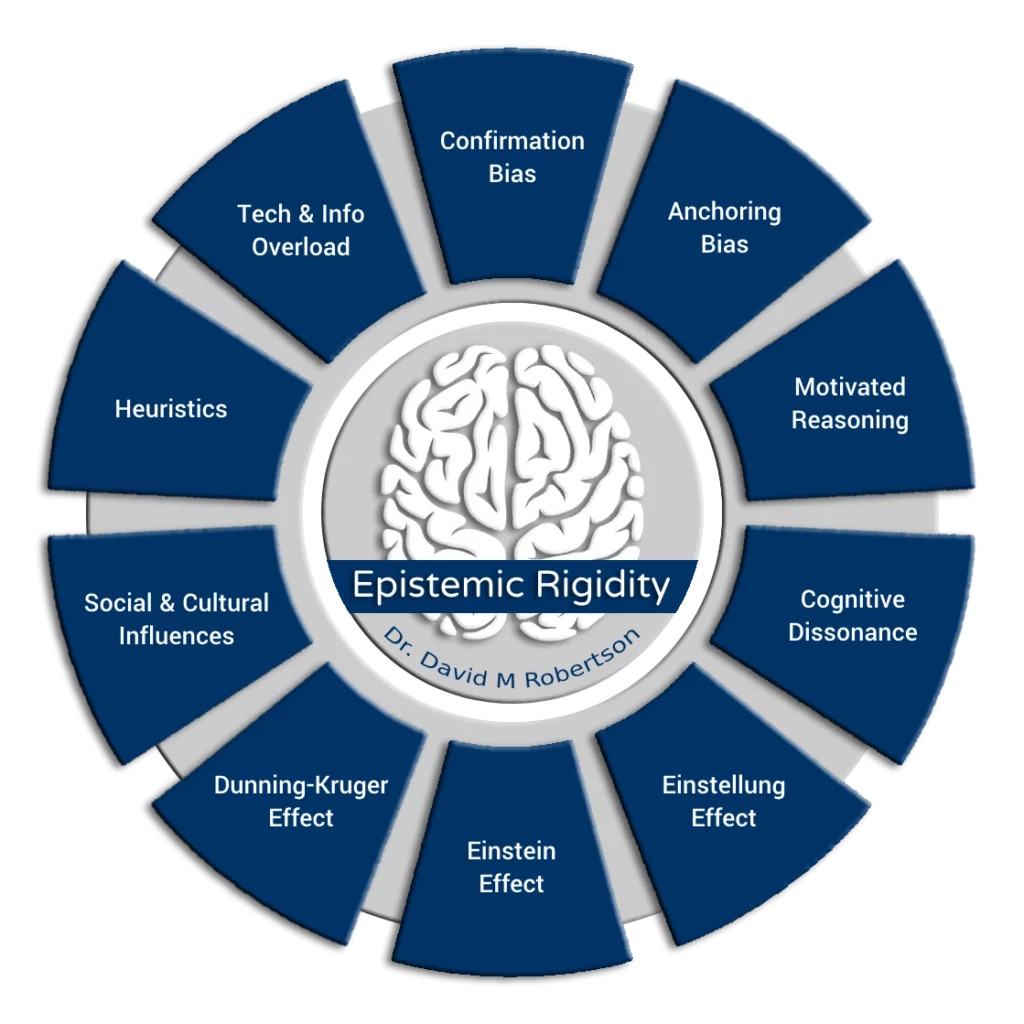

This paper presents Epistemic Rigidity, a theoretical framework that integrates multiple cognitive biases to explain individuals’ difficulties in discarding inaccurate information and advancing their knowledge. The framework incorporates the Einstellung effect, Einstein effect, Dunning-Kruger effect, anchoring bias, and additional cognitive and social factors such as confirmation bias, motivated reasoning, cognitive dissonance, heuristics, and the impact of information overload. By examining these interrelated phenomena, Epistemic Rigidity offers a comprehensive understanding of the cognitive barriers to knowledge advancement. This framework applies across various educational, professional, and organizational contexts, providing valuable insights into fostering environments that promote continuous learning, critical thinking, and the adoption of new ideas. The paper also outlines practical strategies for mitigating these biases, emphasizing the importance of intellectual humility, reflective practice, and diverse information sources.

Keywords: Epistemic Rigidity, cognitive biases, Einstellung effect, Einstein effect, Dunning-Kruger effect, anchoring bias, confirmation bias, motivated reasoning, cognitive dissonance, heuristics, information overload, knowledge advancement, critical thinking, continuous learning, intellectual humility.

Problem Statement

In contemporary education and professional practice, individuals frequently struggle to update their knowledge and practices, or avoid new information altogether, even when it is readily available (Golman et al., 2017; Sweeny et al., 2010). This behavior is now considered fairly common (Narayan et al., 2011). Often attributed to cognitive biases and social influences, it impedes intellectual growth and innovation across various disciplines (Dai et al., 2020; Golman et al., 2017; Howell & Shepperd, 2013). Understanding the mechanisms behind this reluctance to discard outdated information is crucial for developing strategies that promote continuous learning and the adoption of evidence-based practices.

Materials and Methods

This paper proposes a theoretical framework termed Epistemic Rigidity to elucidate the cognitive barriers to knowledge advancement. The framework integrates established cognitive biases, such as the Einstellung effect, Einstein effect, Dunning-Kruger effect, and anchoring bias, alongside additional factors, including confirmation bias, motivated reasoning, cognitive dissonance, and heuristics. A comprehensive review of literature from psychology, education, medicine, and organizational behavior informs the development of this framework, emphasizing the interplay between individual cognition and social dynamics in perpetuating outdated knowledge. Case studies and empirical examples illustrate the application of Epistemic Rigidity across educational, professional, and organizational contexts.

Key Stages

Introduction

Epistemic Rigidity refers to the cognitive phenomena that impede individuals from discarding inaccurate information and advancing their knowledge with more accurate information. This framework integrates several cognitive biases, social and cultural influences, motivated reasoning, cognitive dissonance, heuristics, and the impact of information overload. By understanding these interrelated biases and factors, we can better comprehend why people often struggle to update their beliefs and practices. The following outlines the Epistemic Rigidity theory, explores its significance, and discusses its applications across various fields.

Core Cognitive Biases and Phenomena of Epistemic Rigidity

To understand the concept of Epistemic Rigidity, we must first understand the underlying cognitive biases and phenomena that contribute to this inflexible thinking. Individually, each component describes a small piece of a much bigger puzzle. Together, these biases and phenomena create a robust mental framework resistant to change, often hindering the ability to update and refine knowledge based on new, more accurate information. This section explores the core cognitive mechanisms that drive Epistemic Rigidity, examining how each contributes to the difficulty of discarding outdated or incorrect information.

The Einstellung Effect

The Einstellung effect describes the tendency to rely on familiar solutions, even when better options are available (Tresselt & Leeds, 1953). This cognitive bias can lead to inflexibility in thought and problem-solving, particularly among experts with deeply ingrained knowledge and practices (Bilalić et al., 2010; Ellis & Reingold, 2014).

The Einstein Effect

The Einstein effect highlights the undue credibility granted to information coming from authoritative or respected sources, such as scientists or experts (Hoogeveen et al., 2022). This bias, which is also known as the authority bias, can lead to the uncritical acceptance of information, perpetuating inaccuracies and outdated knowledge (Blass, 1991; Miller & Rosenfeld, 2010; Rebugio, 2013).

The Dunning-Kruger Effect

The Dunning-Kruger effect refers to overestimating one’s competence due to limited knowledge (Dunning, 2011; Schlösser, Dunning, Johnson, & Kruger, 2013). Novices often lack the metacognitive awareness to recognize their own limitations, leading to overconfidence (Dunning et al., 2003; Sanchez & Dunning, 2018).

Anchoring Bias

Anchoring bias occurs when initial information heavily influences subsequent judgments and decisions (Lieder et al., 2018). The first piece of information received about a topic can create a cognitive anchor, making it challenging to revise beliefs in light of new evidence (Chapman & Johnson, 1994).

Confirmation Bias

Confirmation bias, which presents in several ways, involves favoring information that confirms pre-existing beliefs while disregarding information that contradicts them (Klayman, 1995; Oswald & Grosjean, 2004). This bias reinforces existing misconceptions and impedes the acceptance of new information (Lehner et al., 2008; Nickerson, 1998).

Social and Cultural Influences

Social and cultural factors play a crucial role in shaping knowledge and beliefs (Cialdini & Goldstein, 2004; Greif, 1994; Shore, 1998). Peer pressure, societal norms, and cultural traditions can reinforce certain biases and impede the acceptance of new information (Berkowitz, 2004; Gass & Seiter, 2022; Lewandowsky et al., 2012; Markus & Kitayama, 2014; Wood, 2000).

Motivated Reasoning

Motivated reasoning is the tendency to fit new information into pre-existing frameworks based on emotional or motivational factors (Carpenter, 2019). Personal motivations and emotions can significantly impact the acceptance of new information (Kahan, 2013; Kruglanski & Webster, 2018).

Cognitive Dissonance

Cognitive dissonance is the mental discomfort experienced when holding two conflicting beliefs (Harmon-Jones, 2000). This discomfort can lead individuals to rationalize and cling to their existing beliefs, even in the face of contradictory evidence (Dhanda, 2020; Elliot & Devine, 1994; Harmon-Jones & Mills, 2019).

Heuristics and Mental Shortcuts

People often rely on heuristics or mental shortcuts to make decisions quickly (Dale, 2015; Gigerenzer & Gaissmaier, 2011). While these can be efficient, they can also lead to biases and errors in judgment, contributing to cognitive rigidity (Sunstein, 2003).

Technological and Information Overload

Today, people are confronted with artificial intelligence, toxic tribalism, social media algorithms, legacy media, the internet, and many other sources of information that they are expected to evaluate. The vast amount of available information can overwhelm individuals, leading to selective information processing (confirmation bias, cognitive filtering, biased assimilation) and reinforcing existing biases (Datta, Whitmore, & Nwankpa, 2021; Schmitt, Debbelt, & Schneider, 2018; Smith, 2002).

Social media and search engine algorithms also exacerbate cognitive biases and contribute to Epistemic Rigidity by creating echo chambers and filter bubbles, which selectively expose individuals to information that reinforces their existing beliefs while filtering out dissenting views (Azzopardi, 2021; Datta, Whitmore, & Nwankpa, 2021; Von der Weth et al., 2020). This selective exposure not only exacerbates toxic tribalism but also strengthens confirmation bias, making it increasingly difficult for individuals to encounter and consider diverse perspectives and thus entrenching their current knowledge and beliefs (Carson, 2015; Kozyreva, Lewandowsky, & Hertwig, 2020; Messing & Westwood, 2012).

Epistemic Rigidity Theory Explained

Epistemic Rigidity theorizes a strong interplay of various cognitive biases and phenomena, each reinforcing the others to create a robust framework resistant to change. The Einstellung Effect demonstrates this by causing individuals to default to familiar solutions, limiting their openness to new, potentially better alternatives. When combined with the Einstein Effect, which grants undue credibility to authoritative sources, individuals are further inclined to accept and cling to established information, regardless of its accuracy.

The Dunning-Kruger Effect compounds this rigidity by fostering overconfidence in those with limited knowledge who lack the metacognitive skills to recognize their shortcomings. Anchoring Bias then cements initial information as a reference point, making it challenging to adjust beliefs even when presented with new evidence. Confirmation Bias further reinforces this anchoring by driving individuals to seek information that supports their existing beliefs and to dismiss contradictory data.

Social and cultural influences, including peer pressure and societal norms, bolster these cognitive biases by creating an environment where certain beliefs are continually reinforced. Motivated reasoning further entrenches these beliefs by aligning new information with pre-existing emotional and motivational frameworks. Cognitive dissonance adds another layer, as individuals experience mental discomfort when confronted with conflicting information, often leading them to rationalize and adhere to their original beliefs.

Heuristics and mental shortcuts, while useful for quick decision-making, can also lead to errors in judgment, perpetuating cognitive rigidity. Finally, the modern challenge of technological and information overload exacerbates these issues, as individuals are bombarded with vast amounts of information, leading to selective processing that reinforces existing biases.

Together, these cognitive biases and phenomena create a self-reinforcing system of Epistemic Rigidity. Hence, Epistemic Rigidity Theory suggests that obstacles to knowledge acquisition stem not from a single barrier but from a robust and intricate framework of layered obstacles that intensify based on the strength of each layer. Essentially, while each of the contributing theories and biases explains part of the problem, collectively, they paint a clearer picture of a complex mental framework that resists updating and refining knowledge, ultimately impeding intellectual growth and the pursuit of more accurate understanding.

Interesting Interplay of Cognitive Biases

The theory of Epistemic Rigidity posits that cognitive biases do not operate in isolation. Instead, they interact and reinforce each other, creating a robust and self-perpetuating framework that impedes the advancement of knowledge. Understanding these interactions is crucial for comprehending the full extent of cognitive barriers that individuals face when attempting to discard outdated information and adopt new, more accurate knowledge.

The following list outlines the bidirectional relationships between key cognitive biases, demonstrating how each bias can strengthen and perpetuate the others. These are only a few examples of the many interplays already identified. By examining these interplays, we gain a deeper insight into the complexity of cognitive rigidity and the multifaceted nature of the obstacles to intellectual growth.

- Confirmation Bias and Anchoring Bias: Confirmation bias reinforces initial beliefs shaped by anchoring bias, while anchoring bias provides a strong reference point that confirmation bias subsequently supports.

- Motivated Reasoning and Cognitive Dissonance: Motivated reasoning helps individuals integrate new information into existing frameworks to alleviate cognitive dissonance, while cognitive dissonance drives motivated reasoning to reduce mental discomfort caused by conflicting beliefs.

- Einstellung Effect and Anchoring Bias: The Einstellung effect leads to reliance on familiar solutions, serving as anchors, while anchoring bias reinforces these familiar solutions, making it difficult to consider new alternatives.

- Einstein Effect and Confirmation Bias: The Einstein effect, granting undue credibility to authoritative sources, enhances confirmation bias by favoring information that aligns with pre-existing beliefs. Conversely, confirmation bias strengthens the acceptance of information from these sources.

- Dunning-Kruger Effect and Confirmation Bias: The overconfidence associated with the Dunning-Kruger effect is further bolstered by confirmation bias, which favors information that supports an individual’s inflated self-assessment. Overconfidence, in turn, enhances confirmation bias.

- Social and Cultural Influences and Confirmation Bias: Social and cultural influences reinforce confirmation bias by validating certain beliefs within a community. Confirmation bias, in turn, strengthens these influences by favoring information that aligns with prevailing social norms.

- Heuristics and Confirmation Bias: Heuristics simplify decision-making by relying on readily available information that often aligns with pre-existing beliefs, reinforcing confirmation bias. Confirmation bias supports the use of heuristics by favoring information that fits these mental shortcuts.

- Technological and Information Overload and Confirmation Bias: Information overload leads to selective information processing driven by confirmation bias. Confirmation bias, in turn, exacerbates the effects of information overload by reinforcing selective exposure to information that aligns with existing beliefs.

- Social and Cultural Influences and Motivated Reasoning: Social and cultural influences shape motivations and emotional responses, driving motivated reasoning to align new information with pre-existing frameworks. Motivated reasoning then reinforces these social and cultural influences by favoring information that supports societal norms.

- Heuristics and Anchoring Bias: Heuristics often rely on initial information, reinforcing anchoring bias. Anchoring bias strengthens reliance on heuristics by making initial information a strong reference point for subsequent judgments.

- Etc.
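
The pairings listed above can be read as edges in a simple undirected graph. The following is a minimal illustrative sketch, not part of the framework itself: the short labels and the `link_counts` helper are hypothetical names introduced here, and the tally only reflects which pairings this list happens to name. It says nothing about the strength of any interaction.

```python
from collections import Counter

# Pairings exactly as listed above; the labels are illustrative shorthand.
PAIRS = [
    ("confirmation bias", "anchoring bias"),
    ("motivated reasoning", "cognitive dissonance"),
    ("Einstellung effect", "anchoring bias"),
    ("Einstein effect", "confirmation bias"),
    ("Dunning-Kruger effect", "confirmation bias"),
    ("social and cultural influences", "confirmation bias"),
    ("heuristics", "confirmation bias"),
    ("information overload", "confirmation bias"),
    ("social and cultural influences", "motivated reasoning"),
    ("heuristics", "anchoring bias"),
]


def link_counts(pairs):
    """Count how many of the listed pairings each factor takes part in."""
    counts = Counter()
    for first, second in pairs:
        counts[first] += 1
        counts[second] += 1
    return counts


counts = link_counts(PAIRS)
for factor, n in counts.most_common():
    print(f"{factor}: {n}")
```

In this list, confirmation bias takes part in six of the ten pairings and anchoring bias in three. That reflects the examples chosen here rather than an empirical finding about which bias matters most.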

Importance of Understanding Epistemic Rigidity

Understanding Epistemic Rigidity is crucial for recognizing the cognitive and social barriers that impede the advancement of knowledge. This section explores how Epistemic Rigidity affects various domains, demonstrating its potential impact on knowledge progression, educational practices, and professional development. By exploring these areas, we can potentially identify strategies to mitigate the adverse effects of Epistemic Rigidity while fostering environments that promote continuous learning, critical thinking, and the adoption of new ideas.

Impeding Knowledge Advancement

Epistemic Rigidity can significantly hinder the progression of knowledge in various fields. By understanding how these cognitive biases interact, we can better identify the barriers to intellectual growth and the adoption of new ideas.

Enhancing Educational Practices

In educational settings, awareness of Epistemic Rigidity can inform teaching strategies that promote flexibility and openness to new information. Educators can design curricula and pedagogical approaches that challenge students to question their assumptions, critically evaluate sources, and remain open to evolving knowledge.

Improving Professional Practices

In professional contexts, particularly in fields that rapidly evolve, such as medicine, technology, and science, understanding Epistemic Rigidity can lead to more effective practices. Professionals can be encouraged to engage in lifelong learning, interdisciplinary collaboration, and evidence-based decision-making to overcome cognitive biases and improve outcomes.

Other Potential Applications for Epistemic Rigidity Theory

The theory of Epistemic Rigidity is multifaceted and appears to be industry-neutral. Hence, the theory can be applied to various fields and contexts. These might include:

Business and Leadership: In business environments, Epistemic Rigidity can impact decision-making processes and organizational culture. Leaders can apply this theory to foster a culture of innovation and evidence-based decision-making. By recognizing and mitigating cognitive biases such as anchoring bias and the Dunning-Kruger effect, organizations can work to promote a more dynamic and responsive approach to market changes and challenges.

Technology and Innovation: In fields driven by technological advancements, such as engineering and software development, Epistemic Rigidity can affect how teams approach problem-solving and innovation. By promoting interdisciplinary collaboration and continuous learning, organizations can overcome biases like the Einstein effect (deference to authority) and heuristics (mental shortcuts) that may impede progress and innovation.

Public Policy and Governance: In public policy and governance, understanding Epistemic Rigidity is crucial for developing effective policies and initiatives. Policymakers can use this theory to critically evaluate existing policies, challenge conventional wisdom, and incorporate new evidence into decision-making processes. By addressing biases such as cognitive dissonance and social influences, policymakers can enhance policy outcomes and responsiveness to societal needs.

Psychology and Behavioral Science: Within psychology and behavioral science, Epistemic Rigidity provides insights into human decision-making and behavior change. Researchers can apply this theory to study how individuals process and integrate new information and to develop interventions that promote cognitive flexibility and adaptive learning strategies.

Legal and Judicial Systems: In legal contexts, Epistemic Rigidity can influence how judges, lawyers, and jurors evaluate evidence and make decisions. By understanding biases like confirmation bias and motivated reasoning, legal professionals can strive for more objective and equitable outcomes in judicial proceedings.

Each of these applications demonstrates how Epistemic Rigidity theory can be used to enhance practices, improve decision-making, and foster continuous learning across diverse fields and disciplines.

Tactics to Mitigate Epistemic Rigidity in Practice

It should be emphasized that Epistemic Rigidity poses a subtle yet formidable threat to personal and collective growth. In a somewhat paradoxical twist, it often hides behind the guise of certainty, making it especially difficult to identify in ourselves. A critical danger lies in the tendency to project such rigidity onto others while remaining blind to its grip on our own thinking. It must be stressed that those who weaponize the accusation of rigidity against dissenting views risk entrenching themselves even further, ultimately weakening their ability to dismantle their misconceptions or challenge the conditioning that shapes their beliefs. Without vigilance and genuine introspection, this phenomenon perpetuates intellectual stagnation and undermines trust and dialogue, creating barriers to meaningful progress.

Addressing Epistemic Rigidity requires targeted strategies that foster openness to new information, critical thinking, and continuous learning. This section outlines practical approaches for mitigating Epistemic Rigidity across various contexts, including educational settings, professional development, and organizational practices. By implementing these tactics, we can create environments that challenge entrenched beliefs, promote intellectual humility, and encourage the adoption of evidence-based practices. Additionally, overall strategies for individuals provide personal actions that can help overcome cognitive biases and facilitate knowledge advancement.

Educational Settings

- Curriculum Design: Incorporate critical thinking and problem-solving exercises that challenge students to move beyond familiar solutions (Bailin, 2002; Birgili, 2015; Choi, 2004; da Silva Almeida & Rodrigues Franco, 2011; Eales-Reynolds et al., 2013).

- Teaching Methods: Use diverse and updated sources of information to reduce the impact of the Einstein effect and anchoring bias (Darling-Hammond & Bransford, 2007; Perel & Vairo, 1968; Wolk, 2017).

- Assessment Strategies: Implement assessments that evaluate students’ ability to adapt and apply new information rather than merely recalling established knowledge (Martin et al., 2012; Martin et al., 2013).

Professional Development

- Continuous Learning: Promote ongoing education and professional development opportunities that keep professionals updated with the latest advancements in their fields. Additionally, personalized leadership development can contribute to personal leadership, which typically fosters adaptation to change, knowledge acquisition, agility, and strategy.

- Interdisciplinary Collaboration: Encourage collaboration across disciplines to bring fresh perspectives and challenge entrenched beliefs.

- Mentorship Programs: Develop mentorship programs that emphasize the importance of staying informed about current research and best practices.

Organizational Practices

- Evidence-Based Decision-Making: Foster a culture that prioritizes empirical evidence and regular review of practices to ensure alignment with the latest knowledge.

- Critical Feedback: Create feedback mechanisms that evaluate established procedures critically and encourage innovative thinking.

- Diverse Perspectives: Cultivate an inclusive environment that values diverse perspectives and experiences, reducing the likelihood of cognitive biases dominating decision-making processes.

Overall Strategies for Individuals to Overcome Epistemic Rigidity

- Cultivate Intellectual Humility: Encourage individuals to acknowledge the limitations of their knowledge and remain open to new information and perspectives (Barrett, 2017; Whitcomb et al., 2017).

- Engage in Reflective Practice: Promote regular reflection (reflective practice, critical reflection, and design thinking) on one’s own cognitive processes and decision-making strategies to identify and mitigate biases (Liedtka, 2015).

- Foster a Growth Mindset: Emphasize the importance of learning and development, encouraging individuals to view challenges and failures as opportunities for growth.

- Utilize Diverse Information Sources: Ensure access to a wide range of information sources to counteract the effects of the Einstein effect and anchoring bias.

- Promote Critical Inquiry: Develop environments that value questioning and critical analysis, encouraging individuals to challenge established norms and seek new evidence.

- Implement Specific Educational Interventions: Incorporate training in critical thinking, exposure to diverse viewpoints, and the use of case studies to highlight the evolution of knowledge.

While the preceding strategies and practices are helpful, there is one more tactic worth mentioning. Indeed, the danger of projecting rigidity onto others, deepening our entrenchment, and remaining blind to its influence on our own thinking cannot be overstated. To truly benefit from this framework, introspection is necessary. In this context, it helps to begin by asking yourself: Am I genuinely open to being wrong, or am I using the concept of Epistemic Rigidity to invalidate perspectives that challenge me? This question is not merely reflective; it is transformative. Acknowledging and embracing this distinction is a decisive step toward breaking free from cognitive conditioning and advancing toward accurate understanding.

Mass Epistemic Rigidity: More Than an Individual Problem

Mass Epistemic Rigidity refers to the collective cognitive phenomenon where groups or communities resist discarding outdated or inaccurate information despite compelling evidence to the contrary. This resistance is often fueled by a combination of cognitive biases, social dynamics, political influences, and cultural norms that reinforce existing beliefs and discourage critical examination of new information. As a result, entire populations can become entrenched in misinformation, impeding progress and innovation. Overcoming mass epistemic rigidity requires not only individual cognitive flexibility but also systemic changes in how information is disseminated, evaluated, and accepted within communities.

Projecting Rigidity Onto Others

One of the most insidious aspects of Epistemic Rigidity is its tendency to mask itself. Individuals are often quick to recognize rigidity in others while remaining blind to their own cognitive entrenchment. This irony is particularly dangerous in discussions surrounding misinformation, politics, and ideology, where accusations of closed-mindedness frequently fly in both directions. The danger lies in the idea that the more certain an individual is of their own intellectual flexibility, the less likely they are to scrutinize their own biases, reinforcing the very rigidity they seek to critique in others.

This phenomenon is exacerbated by social reinforcement, where like-minded groups validate each other’s perspectives while dismissing outsiders as misinformed or deluded. The resulting cycle of self-affirmation fosters an environment where meaningful debate is replaced by performative disagreement—where individuals engage not to understand opposing views but to reaffirm their own correctness. Such interactions do little to advance collective knowledge and instead deepen ideological divisions.

Limitations

Despite its comprehensive scope, Epistemic Rigidity as a theoretical framework has several limitations that warrant consideration. First, while the framework integrates various cognitive biases and social factors, the interactions between these factors in specific contexts may vary and require further empirical validation. Second, the applicability of Epistemic Rigidity across diverse disciplines and cultural contexts needs exploration to understand potential variations in cognitive barriers to knowledge advancement. Additionally, the framework predominantly focuses on individual and cognitive factors, possibly overlooking broader systemic influences such as institutional norms or policy frameworks that shape knowledge dissemination and acceptance. Finally, the practical implementation of strategies to mitigate Epistemic Rigidity requires careful consideration of organizational dynamics and resources, which may pose challenges in real-world settings.

Discussion

Epistemic Rigidity offers a more nuanced understanding of the cognitive barriers hindering knowledge advancement and innovation. By synthesizing multiple cognitive biases and social influences, the framework provides a robust foundation for addressing the persistence of outdated knowledge and promoting evidence-based practices. The framework’s emphasis on various cognitive biases demonstrates the complexity of human decision-making and the challenges inherent in updating beliefs. Moreover, the integration of social factors like motivated reasoning and cultural influences shows the importance of context in shaping knowledge acceptance and dissemination.

Future research could focus on empirically testing the framework’s predictions across different fields and cultural settings to validate its applicability and effectiveness. Longitudinal studies and cross-cultural investigations would likely be of benefit as well. Additionally, exploring the role of organizational structures, leadership, and educational interventions in mitigating Epistemic Rigidity would enhance practical strategies for fostering a culture of continuous learning and critical inquiry. Addressing Epistemic Rigidity can contribute to advancing knowledge and improving decision-making processes in academia, professional practice, and policy-making.

Conclusion

Epistemic Rigidity provides a comprehensive framework for understanding the cognitive barriers to knowledge advancement. By integrating the Einstellung effect, the Einstein effect, the Dunning-Kruger effect, anchoring bias, and additional cognitive and social factors, this theory demonstrates the complex interplay of biases that impede intellectual growth and provides a strong foundation from which to approach the various complications that arise in our modern world. Recognizing and addressing these biases is crucial for fostering environments that promote continuous learning, critical thinking, and innovation. Epistemic Rigidity theory has practical applications in educational, professional, and organizational settings, showing its importance in advancing knowledge and improving practices.

Acknowledgments:

I extend my heartfelt gratitude to the National Leaderology Association for their encouragement and valuable insights throughout the development of this research. Their support has been instrumental in shaping my ideas and refining the theoretical framework presented in this paper.

Conflict of Interest:

Dr. David Robertson declares no financial or personal relationships that could potentially bias this work within the scope of this research. This includes but is not limited to employment, consultancies, honoraria, grants, patents, royalties, stock ownership, or any other relevant connections or affiliations. He affirms that the research presented in this paper is conducted in an unbiased manner, and there are no conflicts of interest to disclose.

References:

Azzopardi, L. (2021, March). Cognitive biases in search: A review and reflection of cognitive biases in information retrieval. In Proceedings of the 2021 Conference on Human Information Interaction and Retrieval (pp. 27-37).

Bailin, S. (2002). Critical thinking and science education. Science & Education, 11, 361-375.

Barrett, J. L. (2017). Intellectual humility. The Journal of Positive Psychology, 12(1), 1-2.

Berkowitz, A. D. (2004). The social norms approach: Theory, research, and annotated bibliography. https://citeseerx.ist.psu.edu/document?repid=rep1&type=pdf&doi=b488512ae6728b40eeb3a2ea957d89739cdebcb2

Bilalić, M., McLeod, P., & Gobet, F. (2010). The mechanism of the Einstellung (set) effect: A pervasive source of cognitive bias. Current Directions in Psychological Science, 19(2), 111-115.

Birgili, B. (2015). Creative and critical thinking skills in problem-based learning environments. Journal of Gifted Education and Creativity, 2(2), 71-80.

Blass, T. (1991). Understanding behavior in the Milgram obedience experiment: The role of personality, situations, and their interactions. Journal of Personality and Social Psychology, 60(3), 398.

Carpenter, C. J. (2019). Cognitive dissonance, ego-involvement, and motivated reasoning. Annals of the International Communication Association, 43(1), 1-23.

Carson, A. B. (2015). Public discourse in the age of personalization: Psychological explanations and political implications of search engine bias and the filter bubble. Journal of Science Policy & Governance, 7(1).

Chapman, G. B., & Johnson, E. J. (1994). The limits of anchoring. Journal of Behavioral Decision Making, 7(4), 223-242.

Choi, H. (2004). The effects of PBL (Problem-Based Learning) on the metacognition, critical thinking, and problem solving process of nursing students. Journal of Korean Academy of Nursing, 34(5), 712-721.

Cialdini, R. B., & Goldstein, N. J. (2004). Social influence: Compliance and conformity. Annual Review of Psychology, 55, 591-621.

Dai, B., Ali, A., & Wang, H. (2020). Exploring information avoidance intention of social media users: A cognition–affect–conation perspective. Internet Research, 30(5), 1455-1478.

Dale, S. (2015). Heuristics and biases: The science of decision-making. Business Information Review, 32(2), 93-99.

Darling-Hammond, L., & Bransford, J. (Eds.). (2007). Preparing teachers for a changing world: What teachers should learn and be able to do. John Wiley & Sons.

Datta, P., Whitmore, M., & Nwankpa, J. K. (2021). A perfect storm: social media news, psychological biases, and AI. Digital Threats: Research and Practice, 2(2), 1-21.

da Silva Almeida, L., & Rodrigues Franco, A. H. (2011). Critical thinking: Its relevance for education in a shifting society. Revista de Psicología.

Dhanda, B. (2020). Cognitive dissonance, attitude change and ways to reduce cognitive dissonance: a review study. Journal of Education, Society and Behavioural Science, 33(6), 48-54.

Dunning, D., Johnson, K., Ehrlinger, J., & Kruger, J. (2003). Why people fail to recognize their own incompetence. Current directions in psychological science, 12(3), 83-87.

Dunning, D. (2011). The Dunning–Kruger effect: On being ignorant of one’s own ignorance. In Advances in experimental social psychology (Vol. 44, pp. 247-296). Academic Press.

Eales-Reynolds, L. J., Judge, B., McCreery, E., & Jones, P. (2013). Critical thinking skills for education students. Learning Matters.

Elliot, A. J., & Devine, P. G. (1994). On the motivational nature of cognitive dissonance: Dissonance as psychological discomfort. Journal of personality and social psychology, 67(3), 382.

Ellis, J. J., & Reingold, E. M. (2014). The Einstellung effect in anagram problem solving: evidence from eye movements. Frontiers in psychology, 5, 98105.

Gass, R. H., & Seiter, J. S. (2022). Persuasion: Social influence and compliance gaining. Routledge.

Gigerenzer, G., & Gaissmaier, W. (2011). Heuristic decision making. Annual review of psychology, 62, 451-482.

Golman, R., Hagmann, D., & Loewenstein, G. (2017). Information avoidance. Journal of economic literature, 55(1), 96-135.

Greif, A. (1994). Cultural beliefs and the organization of society: A historical and theoretical reflection on collectivist and individualist societies. Journal of political economy, 102(5), 912-950.

Harmon-Jones, E. (2000). A cognitive dissonance theory perspective on the role of emotion in the maintenance and change of beliefs and attitudes. Emotions and beliefs, 185-211.

Harmon-Jones, E., & Mills, J. (2019). An introduction to cognitive dissonance theory and an overview of current perspectives on the theory. https://www.apa.org/pubs/books/Cognitive-Dissonance-Intro-Sample.pdf

Hoogeveen, S., Haaf, J. M., Bulbulia, J. A., Ross, R. M., McKay, R., Altay, S., … & van Elk, M. (2022). The Einstein effect provides global evidence for scientific source credibility effects and the influence of religiosity. Nature Human Behaviour, 6(4), 523-535.

Howell, J. L., & Shepperd, J. A. (2013). Behavioral obligation and information avoidance. Annals of Behavioral Medicine, 45(2), 258-263.

Kahan, D. M. (2013). Ideology, motivated reasoning, and cognitive reflection. Judgment and Decision making, 8(4), 407-424.

Klayman, J. (1995). Varieties of confirmation bias. Psychology of learning and motivation, 32, 385-418.

Kozyreva, A., Lewandowsky, S., & Hertwig, R. (2020). Citizens versus the internet: Confronting digital challenges with cognitive tools. Psychological Science in the Public Interest, 21(3), 103-156.

Kruglanski, A. W., & Webster, D. M. (2018). Motivated closing of the mind: “Seizing” and “freezing”. The motivated mind, 60-103.

Lehner, P. E., Adelman, L., Cheikes, B. A., & Brown, M. J. (2008). Confirmation bias in complex analyses. IEEE Transactions on Systems, Man, and Cybernetics-Part A: Systems and Humans, 38(3), 584-592.

Lewandowsky, S., Ecker, U. K., Seifert, C. M., Schwarz, N., & Cook, J. (2012). Misinformation and its correction: Continued influence and successful debiasing. Psychological science in the public interest, 13(3), 106-131.

Lieder, F., Griffiths, T. L., Huys, Q. J. M., & Goodman, N. D. (2018). The anchoring bias reflects rational use of cognitive resources. Psychonomic bulletin & review, 25, 322-349.

Liedtka, J. (2015). Perspective: Linking design thinking with innovation outcomes through cognitive bias reduction. Journal of product innovation management, 32(6), 925-938.

Markus, H. R., & Kitayama, S. (2014). Culture and the self: Implications for cognition, emotion, and motivation. In College student development and academic life (pp. 264-293). Routledge.

Martin, A. J., Nejad, H., Colmar, S., & Liem, G. A. D. (2012). Adaptability: Conceptual and empirical perspectives on responses to change, novelty and uncertainty. Journal of Psychologists and Counsellors in Schools, 22(1), 58-81.

Martin, A. J., Nejad, H. G., Colmar, S., & Liem, G. A. D. (2013). Adaptability: How students’ responses to uncertainty and novelty predict their academic and non-academic outcomes. Journal of Educational Psychology, 105(3), 728.

Messing, S., & Westwood, S. J. (2012). How social media introduces biases in selecting and processing news content. ResearchGate. https://www.researchgate.net/publication/265673993_How_Social_Media_Introduces_Biases_in_Selecting_and_Processing_News_Content

Miller, G. P., & Rosenfeld, G. (2010). Intellectual hazard: How conceptual biases in complex organizations contributed to the crisis of 2008. Harvard Journal of Law & Public Policy, 33, 807.

Narayan, B., Case, D. O., & Edwards, S. L. (2011). The role of information avoidance in everyday‐life information behaviors. Proceedings of the American Society for Information Science and Technology, 48(1), 1-9.

Nickerson, R. S. (1998). Confirmation bias: A ubiquitous phenomenon in many guises. Review of general psychology, 2(2), 175-220.

Oswald, M. E., & Grosjean, S. (2004). Confirmation bias. Cognitive illusions: A handbook on fallacies and biases in thinking, judgement and memory, 79, 83.

Perel, W. M., & Vairo, P. D. (1968, November). Professor, Is Your Experience Outdated? In The Educational Forum (Vol. 33, No. 1, pp. 39-44). Taylor & Francis Group.

Rebugio, A. B. (2013, July 12). Bias and perception: How it affects our judgment in decision making and analysis. Small Wars Journal.

Sanchez, C., & Dunning, D. (2018). Overconfidence among beginners: Is a little learning a dangerous thing? Journal of Personality and Social Psychology, 114(1), 10.

Schlösser, T., Dunning, D., Johnson, K. L., & Kruger, J. (2013). How unaware are the unskilled? Empirical tests of the “signal extraction” counterexplanation for the Dunning–Kruger effect in self-evaluation of performance. Journal of Economic Psychology, 39, 85-100.

Schmitt, J. B., Debbelt, C. A., & Schneider, F. M. (2018). Too much information? Predictors of information overload in the context of online news exposure. Information, Communication & Society, 21(8), 1151-1167.

Shore, B. (1998). Culture in mind: Cognition, culture, and the problem of meaning. Oxford University Press.

Smith, B. G. (2002). Information overload. Encyclopedia of Library and Information Science: Volume 72: Supplement 35, 217.

Sunstein, C. R. (2003). Hazardous heuristics. University of Chicago Law School. https://chicagounbound.uchicago.edu/cgi/viewcontent.cgi?article=1227&context=law_and_economics

Sweeny, K., Melnyk, D., Miller, W., & Shepperd, J. A. (2010). Information avoidance: Who, what, when, and why. Review of general psychology, 14(4), 340-353.

Tresselt, M. E., & Leeds, D. S. (1953). The Einstellung effect in immediate and delayed problem-solving. The Journal of General Psychology, 49(1), 87-95.

Von der Weth, C., Abdul, A., Fan, S., & Kankanhalli, M. (2020, October). Helping users tackle algorithmic threats on social media: a multimedia research agenda. In Proceedings of the 28th ACM international conference on multimedia (pp. 4425-4434).

Whitcomb, D., Battaly, H., Baehr, J., & Howard-Snyder, D. (2017). Intellectual humility. Philosophy and Phenomenological Research, 94(3), 509-539.

Wolk, S. (2017). Educating students for an outdated world. Phi Delta Kappan, 99(2), 46-52.

Wood, W. (2000). Attitude change: Persuasion and social influence. Annual review of psychology, 51(1), 539-570.